A Beginner’s Guide to Using a VLM

In recent years, there have been exciting advances in computer vision and natural language processing. However, many real-world problems are inherently multimodal — they involve several distinct forms of data, such as images and text.

This is where Vision-Language Models (VLMs) come in. They have emerged as a major development that bridges the gap between text and images: these models can understand visual content and generate textual descriptions of it, enabling applications that range from automated image captioning to advanced visual question-answering systems.

BLIP (Bootstrapping Language-Image Pre-training) was introduced by Salesforce for unified vision-language understanding and generation, and BLIP-2 is its successor. Both are vision-language pre-training models.

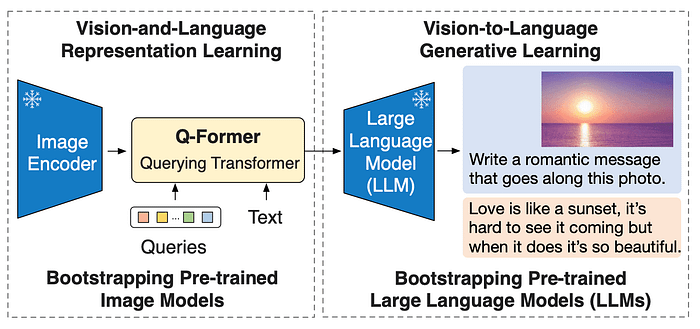

The BLIP-2 model consists of three main components: a frozen image encoder, a frozen Large Language Model (LLM), and a Querying Transformer (Q-Former).

- Frozen Image Encoder: This processes the image input and extracts visual features.

- Frozen Large Language Model (LLM): This processes the text input and generates the output text.

- Querying Transformer (Q-Former): This uses a set of learnable query vectors to extract the visual features from the image encoder that are most relevant to the text, and feeds them to the LLM.

The Q-Former is the only trainable part of BLIP-2; both the image encoder and the language model remain frozen.
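To make the Q-Former's role concrete, here is a toy, pure-Python sketch of its core idea; it is not the actual BLIP-2 implementation. A small, fixed set of learnable query vectors cross-attends over the frozen image encoder's patch features, and the result is linearly projected to the LLM's embedding width. In BLIP-2 the Q-Former uses 32 queries of dimension 768, many transformer layers, multi-head attention and learned key/value projections; the tiny sizes, random values, single attention step, and the helper names `cross_attend` and `project` below are illustrative assumptions.

```python
import math
import random


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries, image_feats):
    """Single-head scaled dot-product attention: each query attends over all image patches.

    Simplification (assumption): the image features serve directly as keys and values;
    the real Q-Former applies learned projections first.
    """
    dim = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in image_feats]
        weights = softmax(scores)
        # Weighted average of the patch features, computed per dimension.
        outputs.append([sum(w * feat[j] for w, feat in zip(weights, image_feats)) for j in range(dim)])
    return outputs


def project(matrix, vec):
    # Linear layer without bias: an (out_dim x in_dim) matrix times an in_dim vector.
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


random.seed(0)
DIM, NUM_PATCHES, NUM_QUERIES, LLM_DIM = 8, 50, 4, 12  # toy sizes, not BLIP-2's

# Stand-in for the frozen image encoder's output: one feature vector per patch.
image_feats = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_PATCHES)]
# Learnable query vectors (random here; learned during BLIP-2 pre-training).
queries = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_QUERIES)]
# Projection from the Q-Former width to the LLM's embedding width.
projection = [[random.gauss(0, 0.1) for _ in range(DIM)] for _ in range(LLM_DIM)]

query_out = cross_attend(queries, image_feats)
soft_prompt = [project(projection, v) for v in query_out]
print(len(soft_prompt), len(soft_prompt[0]))  # 4 12
```

Whatever the number of image patches, the output always has one vector per query, so the LLM receives a short, fixed-length visual prompt.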

Q-Former is a transformer model that consists of two submodules that share the same self-attention layers:

- An image transformer that interacts with the frozen image encoder for visual feature extraction

- A text transformer that can function as both a text encoder and a text decoder
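The two submodules can share self-attention layers because they are run with different attention masks. The BLIP-2 paper pre-trains the Q-Former with three objectives: image-text contrastive learning (queries and text cannot see each other), image-text matching (every position attends to every other), and image-grounded text generation (queries attend only to queries, while each text token attends to all queries and to earlier text tokens, which is what lets the text transformer act as a decoder). The pure-Python sketch below only builds those masks; the function name `qformer_attention_mask` and its mode strings are hypothetical, chosen for illustration.

```python
MODES = ("unimodal", "bidirectional", "causal")


def qformer_attention_mask(num_queries, num_text, mode):
    """Return mask[i][j] = True if position i may attend to position j.

    Positions 0..num_queries-1 are the learned queries; the remaining ones are text tokens.
    """
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode!r}")
    n = num_queries + num_text
    mask = []
    for i in range(n):
        row = []
        for j in range(n):
            i_query, j_query = i < num_queries, j < num_queries
            if mode == "bidirectional":   # image-text matching: full attention
                allowed = True
            elif mode == "unimodal":      # image-text contrastive: queries and text kept apart
                allowed = i_query == j_query
            elif i_query:                 # causal (generation): queries never see the text
                allowed = j_query
            else:                         # causal: text sees all queries and earlier text
                allowed = j_query or j <= i
            row.append(allowed)
        mask.append(row)
    return mask


def show(mask):
    # "x" = may attend, "." = masked out
    for row in mask:
        print("".join("x" if allowed else "." for allowed in row))


show(qformer_attention_mask(num_queries=2, num_text=3, mode="causal"))
# xx...
# xx...
# xxx..
# xxxx.
# xxxxx
```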

That was a brief overview of VLMs and an introduction to BLIP-2.

Let’s see how we can get hands-on using a VLM!

Here is a step-by-step guide to using BLIP-2 for basic tasks with zero-shot learning.

Zero-shot learning (ZSL) is a machine learning paradigm in which a model generalizes to unseen classes or tasks without any specific examples or training data for them. Here, that means we give the model a new image it was never trained on and ask it to perform tasks without any task-specific fine-tuning!

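First, we'll install Transformers. The code in this guide also uses torch, Pillow and requests.

```bash
pip install git+https://github.com/huggingface/transformers.git
```

BLIP-2 is also included in regular Transformers releases (v4.27 and later), so `pip install transformers` works as well.

Next, we need an input image.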

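```python
import requests
from PIL import Image
from IPython.display import display  # available in Jupyter; in a plain script, use image.show() instead

url = 'https://assets.dmagstatic.com/wp-content/uploads/2016/05/dogs-playing-in-grass.jpg'
# Download the image and convert it to RGB (three color channels)
image = Image.open(requests.get(url, stream=True).raw).convert('RGB')

display(image.resize((596, 437)))
```

This downloads and displays the image I chose for our trial: a photo of dogs playing in the grass.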

We need a pre-trained BLIP-2 model and a corresponding preprocessor to prepare the inputs.
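A quick back-of-the-envelope calculation shows why the weights are loaded in float16. The "opt-2.7b" in the checkpoint name refers to its OPT language model with roughly 2.7 billion parameters; the frozen image encoder and the Q-Former add more on top, and generation needs extra memory for activations. The helper name `weight_memory_gb` is ours, and the numbers below cover only the language model's weights.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed just to store the weights, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


OPT_PARAMS = 2.7e9  # language model only; the image encoder and Q-Former are not counted

for dtype_name, nbytes in [("float32", 4), ("float16", 2)]:
    print(f"{dtype_name}: ~{weight_memory_gb(OPT_PARAMS, nbytes):.1f} GB")
# float32: ~10.8 GB
# float16: ~5.4 GB
```

Halving the bytes per parameter halves the weight memory, which is often the difference between fitting on a consumer GPU or not.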

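```python
from transformers import AutoProcessor, Blip2ForConditionalGeneration
import torch

# The processor prepares images (and text prompts) for the model
processor = AutoProcessor.from_pretrained("Salesforce/blip2-opt-2.7b")
# Load the weights in half precision (float16) to reduce memory use
model = Blip2ForConditionalGeneration.from_pretrained("Salesforce/blip2-opt-2.7b", torch_dtype=torch.float16)
```

We also move the model to a GPU, when one is available, to make text generation faster.

```python
device = "cuda" if torch.cuda.is_available() else "cpu"
model.to(device)
```

This guide assumes a CUDA GPU. Float16 is mainly a GPU optimization; on a CPU some float16 operations may be slow or unsupported, in which case use torch.float32 both when loading the model and when moving the inputs to the device.

Image Captioning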

Let's see how the model captions this beautiful photo of the dogs.

```python
# No text prompt: the model generates a caption from the image alone
inputs = processor(image, return_tensors="pt").to(device, torch.float16)
generated_ids = model.generate(**inputs, max_new_tokens=20)
# Convert the generated token IDs back into a string
generated_text = processor.batch_decode(generated_ids, skip_special_tokens=True)[0].strip()
print(generated_text)  # two dogs running in the grass with a tennis ball
```

Prompted image captioning

We can also give the model the beginning of a sentence and let it complete it; this is called prompted image captioning.
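Plain captioning and prompted captioning go through exactly the same steps (preprocess, `model.generate`, `processor.batch_decode`); the only difference is whether a `text=` prompt is passed to the processor. The wrapper below makes that explicit. It is a convenience sketch, not part of Transformers: the name `generate_text` is hypothetical, and it expects the `processor`, `model` and `device` created above plus a dtype such as `torch.float16`.

```python
def generate_text(image, processor, model, device, dtype, prompt=None, max_new_tokens=20):
    """Run one BLIP-2 generation: preprocess, generate, decode.

    With prompt=None this is plain captioning; with a prompt string the model
    continues that text.
    """
    if prompt is None:
        inputs = processor(image, return_tensors="pt")
    else:
        inputs = processor(image, text=prompt, return_tensors="pt")
    # Move the inputs to the device and cast floating-point tensors, as in the snippets above
    inputs = inputs.to(device, dtype)
    generated_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode the first (and only) sequence in the batch
    return processor.batch_decode(generated_ids, skip_special_tokens=True)[0].strip()


# Example usage, assuming the objects created earlier in this guide:
# caption = generate_text(image, processor, model, device, torch.float16)
# completion = generate_text(image, processor, model, device, torch.float16, prompt="this is a photo of")
```

Visual question answering and chat-based prompting are also just text prompts, so the same wrapper covers them by passing the formatted prompt string.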

```python
prompt = "this is a photo of"
# Pass the prompt as text; the model continues it
inputs = processor(image, text=prompt, return_tensors="pt").to(device, torch.float16)
generated_ids = model.generate(**inputs, max_new_tokens=20)
generated_text = processor.batch_decode(generated_ids, skip_special_tokens=True)[0].strip()
print(generated_text)  # two dogs running in the grass
```

Visual question answering

For visual question answering, the prompt must follow the format "Question: {} Answer:", so that the model answers the question based on the image.
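Since VQA is prompted generation with a fixed template, a tiny formatting helper avoids typos in the template. The helper name `vqa_prompt` is hypothetical; only the "Question: {} Answer:" format comes from this guide.

```python
def vqa_prompt(question):
    """Wrap a question in the "Question: {} Answer:" template used for BLIP-2 VQA in this guide."""
    return "Question: {} Answer:".format(question.strip())


print(vqa_prompt("What is a dog holding?"))
# Question: What is a dog holding? Answer:
```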

```python
prompt = "Question: What is a dog holding? Answer:"
inputs = processor(image, text=prompt, return_tensors="pt").to(device, torch.float16)
# Short answers need only a few new tokens
generated_ids = model.generate(**inputs, max_new_tokens=10)
generated_text = processor.batch_decode(generated_ids, skip_special_tokens=True)[0].strip()
print(generated_text)  # A tennis ball
```

Chat-based prompting

This is the most interesting part: you get to have a conversation about the image!

```python
# Earlier turns of the conversation as (question, answer) pairs
context = [
    ("What is a dog holding?", "a tennis ball"),
    ("Where are they?", "On the grass.")
]
question = "What for?"
template = "Question: {} Answer: {}."

# Flatten the history into one prompt and end with the new, unanswered question
prompt = " ".join(template.format(q, a) for q, a in context) + " Question: " + question + " Answer:"
print(prompt)
# Question: What is a dog holding? Answer: a tennis ball. Question: Where are they? Answer: On the grass.. Question: What for? Answer:
```

Then we pass this prompt, together with the image, to the model:

```python
inputs = processor(image, text=prompt, return_tensors="pt").to(device, torch.float16)
generated_ids = model.generate(**inputs, max_new_tokens=10)
generated_text = processor.batch_decode(generated_ids, skip_special_tokens=True)[0].strip()
print(generated_text)  # To play with.
```

BLIP-2 is a zero-shot vision-language model that can handle various image-to-text tasks using prompts made of both an image and text. It is a highly effective and efficient approach to image understanding across many applications.
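One refinement to the chat example: the printed prompt contains "On the grass.." because the stored answer already ends with a period and the template adds another. A small helper can strip that trailing period while flattening the history; the name `build_chat_prompt` is hypothetical. The "To play with." answer was generated from the original prompt, so a cleaned-up prompt may produce a slightly different answer.

```python
def build_chat_prompt(history, question):
    """Flatten earlier (question, answer) turns plus a new question into one prompt.

    Trailing periods are stripped from answers so the template's own "." is not doubled.
    """
    turns = ["Question: {} Answer: {}.".format(q, a.rstrip(".")) for q, a in history]
    turns.append("Question: {} Answer:".format(question))
    return " ".join(turns)


context = [
    ("What is a dog holding?", "a tennis ball"),
    ("Where are they?", "On the grass."),
]
prompt = build_chat_prompt(context, "What for?")
print(prompt)
# Question: What is a dog holding? Answer: a tennis ball. Question: Where are they? Answer: On the grass. Question: What for? Answer:

# To keep chatting, append each new answer to the history before asking the next question, e.g.
# context.append(("What for?", generated_text))
```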

I hope to share something even more exciting in my next article. Stay tuned!
